Storage is an important part of a virtual infrastructure, but compared with the server side (which provides the computational “power”), the storage vendors' solutions are not so homogeneous, nor as easy to compare or understand. This is partly because the products are positioned differently, and partly because there are many different aspects to consider. In most cases, the most important aspects are also the “hidden” or less documented ones.

What is probably clear is the difference between local storage (DAS) and shared storage (SAN or NAS, but sometimes also some kinds of DAS) and why shared storage is so important: not necessarily for performance, but mainly because it is required by design. In a virtual infrastructure for system virtualization (VMware vSphere, Microsoft Hyper-V, Citrix XenServer, KVM, …) shared storage is required to provide some core features (like HA and VM hot-migration). This could change in the future (consider, for example, Marathon everRun VM without shared storage), but at the moment it is simply a requirement. It is also a plus, because it can provide several other improvements.

For specific infrastructures, however, you can also plan to use local storage (see, for example, the post about VDI infrastructure design). The same applies to other cases where redundancy, replication, failover, failback, … are handled at the application layer (as in the Exchange DAG architecture).

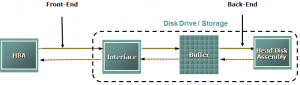

Another difference is in the protocols used on the front-end side (the part closest to the hosts):

- SAN can use iSCSI or FC (FC, FCoE, …)

- NAS can use NFS or CIFS/SMB

There are some relevant differences between the block-level access provided by a SAN and the file-level access provided by a NAS (I will discuss them in a future post), but the protocol differences may be less relevant (especially when we compare iSCSI at 10 Gbps with FC at 8 Gbps, or NFS with SMB 3.0), and in some cases they are just myths.

The number of front-end ports may be more relevant, but in this case, for SAN storage, we have to consider how the storage controllers work together (active/active, active/passive, active/standby) and which kind of multipath module can be used.

On the storage back-end (near the disks), the type and number of disks, the RAID type, and the type of cache can be really important in determining performance… but we must not forget the front-end, which can become the real bottleneck of our storage (especially when we use fast disks and/or fast caches). Storage scalability can solve this possible issue, but only with one of the two scaling approaches.
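To make the bottleneck idea concrete, here is a minimal Python sketch. All the numbers (disk count, per-disk throughput, port count and speed) are made-up assumptions for illustration, and the model deliberately ignores RAID penalties, cache hits, and protocol overhead: it only compares the raw aggregate back-end throughput with the raw front-end line rate.

```python
def array_throughput_mbps(disks, mbps_per_disk, ports, gbps_per_port):
    """Return (back_end, front_end, effective) throughput in MB/s.

    Simplified model: the array can never deliver more than the
    slower of its two sides.
    """
    back_end = disks * mbps_per_disk
    # 1 Gbit/s is 125 MB/s of raw line rate; protocol overhead is ignored.
    front_end = ports * gbps_per_port * 125
    return back_end, front_end, min(back_end, front_end)


# Hypothetical array: 24 disks at 150 MB/s each, two 10 Gbps front-end ports.
back_end, front_end, effective = array_throughput_mbps(
    disks=24, mbps_per_disk=150, ports=2, gbps_per_port=10
)
print(back_end, front_end, effective)
```

With these assumed values the disks could deliver more than the ports can carry, so the front-end is the limiting side. Faster disks or a bigger cache would only widen that gap.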

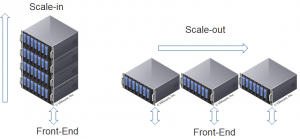

Shared storage can scale in two different ways (similar to how a cluster of virtualization hosts can scale in computational power):

- Scale-up (vertical scaling): a storage array is expanded by just adding more disks and/or more bays/enclosures. The front-end part, however, usually remains the same and cannot scale.

- Scale-out (horizontal scaling): a storage array is expanded by adding other storage arrays to a single “cluster”. In this way the front-end part also increases.
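The difference between vertical and horizontal scaling can be sketched with a toy model. The `Array` class, the helper functions, and the disk and port counts below are all hypothetical. They do not describe any vendor's product, only the idea that adding disks leaves the front-end untouched while adding arrays grows both sides.

```python
from dataclasses import dataclass


@dataclass
class Array:
    disks: int
    frontend_ports: int


def scale_vertically(array, extra_disks):
    """Vertical scaling: more disks behind the same front-end ports."""
    return Array(array.disks + extra_disks, array.frontend_ports)


def scale_horizontally(cluster, new_array):
    """Horizontal scaling: another array joins the cluster."""
    return cluster + [new_array]


def totals(cluster):
    """Return (total disks, total front-end ports) for a list of arrays."""
    return (
        sum(a.disks for a in cluster),
        sum(a.frontend_ports for a in cluster),
    )


base = Array(disks=24, frontend_ports=4)

vertical = [scale_vertically(base, extra_disks=24)]
horizontal = scale_horizontally([base], Array(disks=24, frontend_ports=4))

print(totals(vertical))    # capacity doubles, ports stay the same
print(totals(horizontal))  # capacity and ports both double
```

Both results end up with the same number of disks, but only the horizontal case has more front-end ports to serve them.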

For more information, see also this good post: Scale-out vs. scale-up: the basics. Of course, not all scale-out architectures are the same: the scalability is usually similar, but some may, for example, provide more availability than others (I will give more information in future posts).

Another way to approach the possible front-end bottleneck is to reduce the “gap” between the virtualization hosts and the storage, which can be done in two different ways:

- Bring part of the storage closer to the hosts and the VMs: for example, part of the cache.

- Bring the VMs inside the storage array: basically by running them directly on the storage processors.

These solutions are really interesting, especially for scalability and performance, and local storage with a shared-nothing approach seems to go in this direction, where storage and computing are combined in a single host/block. This can be done, for example, with a VSA (Virtual Storage Appliance) that has a good scale-out architecture.

Finally, there are other aspects, like the filesystem (see, for example, the post on ZFS), multi-tiering support, data protection features, price, maturity, compatibility, whether the solution is “open” or not (basically, whether the software part is not tightly tied to the hardware part), … and so on.