
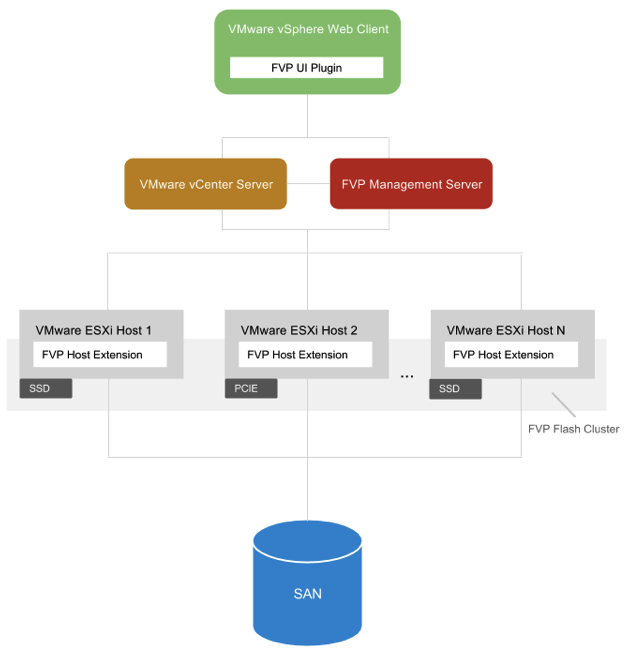

PernixData FVP is Flash Hypervisor software that aggregates server flash across a virtualized data center, creating a scale-out data tier that accelerates reads and writes to primary storage in a simple and powerful way. It was one of the first products (probably the first) to implement fault-tolerant write-back acceleration.

In the previous posts I described the installation and configuration procedures of FVP 1.5 on vSphere 5.5. Now it's time for some final considerations and comments.

Let's start with the performance results. I'm still working on finding a good set of tools to benchmark my virtual environments, and it is not easy, both because of the many different tools available and because of the limits of most of them.

It is even more complicated to collect useful and repeatable data when using a host cache tool like PernixData FVP, because the cache also needs some time to warm up and adapt to the workload.

On the PernixData site you can find these numbers:

- Accelerate application performance: improve performance of read- and write-intensive applications by 10x on average (millisecond to microsecond latency).

- Delay a storage upgrade: PernixData FVP can deliver up to 62x more IOPS at 1/6 the cost of new SAS drives, and 32x more IOPS at 1/3 the cost of new SSDs.

- Get more from a new storage array: you can get an additional 6x more IOPS on top of a new hybrid array, while lowering your investment in that technology by 1/2.

- Future-proof your investment: storage performance can scale out linearly with compute capacity and can be architected based on specific VM requirements. This minimizes cost while maximizing application performance (today and tomorrow).

Are they real or at least reasonable?

As noted above, it is not easy to measure the real performance improvement: the first set of tests (on new storage blocks) may be as slow as un-accelerated storage, but performance improves with usage, and the 10x factor gets close to the real improvement.
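A simple way to reason about this warm-up effect is the usual weighted-average model of cache latency: effective latency = h × flash latency + (1 − h) × array latency, where h is the cache hit ratio. The sketch below is a toy model, not how FVP computes anything; the latencies (0.1 ms for local flash, 10 ms for the array) and the function names are hypothetical placeholders, not measurements from FVP or from my lab.

```python
"""Toy model of how a host-side cache warms up.

Assumption: the latencies below are illustrative placeholders, not FVP measurements.
"""

FLASH_LATENCY_MS = 0.1   # hypothetical local flash latency
ARRAY_LATENCY_MS = 10.0  # hypothetical un-accelerated array latency


def effective_latency_ms(hit_ratio: float,
                         flash_ms: float = FLASH_LATENCY_MS,
                         array_ms: float = ARRAY_LATENCY_MS) -> float:
    """Weighted average: hits are served from flash, misses from the array."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * flash_ms + (1.0 - hit_ratio) * array_ms


def speedup(hit_ratio: float) -> float:
    """Improvement factor relative to running with no cache at all."""
    return ARRAY_LATENCY_MS / effective_latency_ms(hit_ratio)


if __name__ == "__main__":
    # A cold cache (hit ratio 0) behaves like the array; the speedup grows as it warms up.
    for h in (0.0, 0.5, 0.9, 0.95, 0.99):
        print(f"hit ratio {h:.2f}: {effective_latency_ms(h):6.3f} ms, {speedup(h):5.1f}x")
```

With these assumed numbers, a 90% hit ratio already gives roughly a 9x improvement, while a cold cache gives none. This is why the first benchmark runs can look no better than the array and why the results improve as the cache fills.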

Regarding the storage IOPS improvement, it is really difficult to give "magic" numbers, but in several cases it can certainly revive an old or slow storage array. I tested it with Horizon View on a simple SATA datastore (2 SATA disks in RAID1 on an old Dell PowerVault MD3000i), and it is really impressive how many VMs you can run with reasonable performance: without the cache, 3-4 VMs were the practical limit; with the flash cache, performance was good enough even with more than 20. See also this post on FVP with View: PernixData FVP accelerate your Horizon View deployment.

The host cache can also significantly reduce both the bandwidth used on the storage front-end links and the "pressure" on the storage cache, freeing resources on the array (the flash cluster overview shows the IOPS and bandwidth saved on your storage).

The cost really depends on what you use. Also, although mixed flash devices are permitted (as in this example), using similar flash on all the cluster's hosts is recommended in order to get consistent performance.

You also have to plan your networking carefully to minimize latency in a redundant write-back implementation, and this could increase the cost.
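To see why the network matters so much for redundant write back, here is a toy latency model. It assumes (this is my simplification, not documented FVP internals) that a write is acknowledged only after it has landed on local flash and on every replica peer, with the peers written in parallel. The function name and all the numbers are hypothetical.

```python
"""Toy model of write latency with redundant write-back.

Assumption: a write is acknowledged only after it has landed on local flash
and on every replica peer, with peers written in parallel. All numbers are
hypothetical, not FVP measurements.
"""

from typing import Sequence


def write_back_latency_us(local_flash_us: float,
                          peer_rtts_us: Sequence[float],
                          remote_flash_us: float) -> float:
    """Latency seen by the VM: the slowest of the local write and each peer write."""
    # Each peer write costs one network round trip plus the remote flash write.
    peer_latencies = [rtt + remote_flash_us for rtt in peer_rtts_us]
    return max([local_flash_us, *peer_latencies])


if __name__ == "__main__":
    local = 50.0   # hypothetical local flash write, microseconds
    remote = 50.0  # hypothetical flash write on the peer host, microseconds
    # Hypothetical round-trip times: a fast dedicated link vs a busy shared one.
    print("no peers:          ", write_back_latency_us(local, [], remote), "us")
    print("1 peer, 30 us RTT: ", write_back_latency_us(local, [30.0], remote), "us")
    print("2 peers, 30/400 us:", write_back_latency_us(local, [30.0, 400.0], remote), "us")
```

Under these assumptions, the slowest replica link sets the write latency (450 us in the last case, against 50 us with no peers). This is why dedicated, low-latency links between hosts may be worth their extra cost.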

Of course the throughput of the local flash must also be taken into consideration, but a bigger cache is generally better (as long as it is also fast enough), so you can choose between a PCIe or an SSD deployment depending also on the budget (with SSDs you just need a "dummy" SAS controller, so they can be cheap to implement).

You also need to clearly understand the limitations of this solution (and of host cache solutions in general): the cache must first be populated, so you still need storage with reasonable performance. And depending on your workload type, the host cache may not be very effective.

Some specific considerations about FVP:

- It works only with vSphere 5.x, which may be a limit in multi-hypervisor scenarios, but it is good for all VMware customers, considering that it is not limited to the Enterprise Plus edition of vSphere 5.5.

- Templates, ISOs and powered-off VMs are not accelerated, so deploying a VM from a template may not be as fast as cloning a powered-on (accelerated) VM. Linked clones in a View deployment must also be carefully planned on faster storage, because those blocks do not seem to be accelerated.

- Flash disks in use by FVP are not marked as used, so you have to treat them carefully and avoid creating a datastore on them.

- Depending on the license, you can use more flash devices as cache: this could be useful to implement different types of policy according to the type of cache.

- License activation is a little tedious, but I used the offline activation and it works reasonably well.

- Currently (but see the following note) only block-based storage can be accelerated; NFS datastores cannot be accelerated in version 1.5.

- FVP is mostly compatible with vSphere features, but in some cases I had issues with Storage vMotion. Better CLI documentation would be nice, to find the commands required to clear and fix the status of VMs (or datastores) with a pending write-back activation.

- For write back, all the hosts in the cluster need an active flash cache, but host maintenance is handled correctly (DPM seems not to be, at least not during the creation of the Flash Cluster).

What’s new in the next release?

On Thursday 24th April, during #SFD5, PernixData made a big announcement about its product development: PernixData Introduces New FVP Features to Accelerate any VM, on any Host, with any Shared Storage System.

You can find more information in a Duncan Epping post (PernixData feature announcements during Storage Field Day), but the main news items are:

- Support for NFS

- Network Compression

- Distributed Fault Tolerant Memory

- Topology Awareness

So there is a lot of interesting stuff, and several posts to write in the future. The product is already cool, but the new features will make it a must for any virtualized environment.