The Intel® Xeon® CPU Max Series is designed to maximize bandwidth with the new high-bandwidth memory (HBM).

This processor is architected to unlock performance and speed discoveries in data-intensive workloads, such as modeling, artificial intelligence, deep learning, high performance computing (HPC) and data analytics.

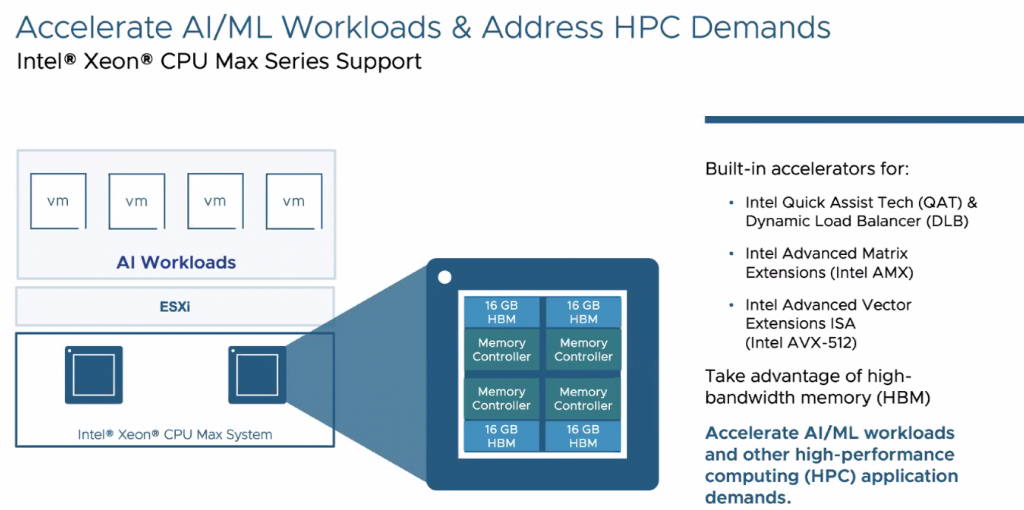

The Intel Xeon CPU Max Series features a new microarchitecture and supports a rich set of platform enhancements, including increased core counts, advanced I/O and memory subsystems, and built-in accelerators that will speed delivery of life-changing discoveries. Intel Max Series CPUs feature:

- Up to 56 performance cores constructed of four tiles and connected using Intel’s embedded multi-die interconnect bridge (EMIB) technology, in a 350-watt envelope.

- 64 GB of high-bandwidth in-package memory, as well as PCI Express 5.0 and CXL 1.1 I/O. Xeon Max CPUs provide more than 1 GB of high-bandwidth memory (HBM) capacity per core, enough to fit most common HPC workloads.

- Up to 20x performance speed-up on Numenta AI technology for natural language processing (NLP) with HBM compared to other CPUs.

The platform also enables faster discoveries and more effective research. With the Intel Xeon CPU Max Series and 4th Gen Intel Xeon Scalable processors, you gain the performance and power efficiency required for the most challenging workloads and the most built-in accelerators of any CPU on the market. Achieve more efficient CPU utilization, lower electricity consumption and higher ROI with key accelerators for HPC and AI workloads, including:

- Intel Advanced Matrix Extensions (Intel AMX): Significantly accelerate deep learning inference and training on the CPU. Intel® AMX boosts AI performance and delivers 8x peak throughput over AVX-512 for INT8 operations with INT32 accumulation.

- Intel Data Streaming Accelerator (Intel DSA): Drive high performance for data-intensive workloads by improving streaming data movement. With Intel® DSA, achieve up to 79% higher storage I/O operations per second (IOPS) with as much as 45% lower latency when using NVMe over TCP.

- Intel Advanced Vector Extensions 512 (Intel AVX-512): Accelerate performance with vectorization for faster calculations on larger data sets in scientific simulations, AI/deep learning, 3D modeling and analysis, and other intensive workloads. Intel® AVX-512 is the latest x86 vector instruction set to accelerate performance for your most demanding computational tasks.
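Before tuning software for these accelerators, it can help to confirm that the operating system actually exposes them. The following is a minimal sketch, assuming a Linux host where `/proc/cpuinfo` lists the kernel's feature flags (`avx512f`, `amx_tile`, `amx_int8`, `amx_bf16`). The helper names `parse_cpu_flags` and `detect_features` are illustrative, not part of any Intel tool. Intel DSA is a device rather than an instruction set extension, so this check does not cover it.

```python
from pathlib import Path

# Linux kernel flag names as they appear on the "flags" line of /proc/cpuinfo.
FEATURE_FLAGS = {
    "Intel AVX-512 (foundation)": "avx512f",
    "Intel AMX tiles": "amx_tile",
    "Intel AMX INT8": "amx_int8",
    "Intel AMX BF16": "amx_bf16",
}


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line, or an empty set."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def detect_features(cpuinfo_text: str) -> dict[str, bool]:
    """Map each feature name to whether its flag is present."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {name: flag in flags for name, flag in FEATURE_FLAGS.items()}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    # On non-Linux systems the file is missing, so every feature reports "no".
    text = path.read_text() if path.exists() else ""
    for name, present in detect_features(text).items():
        print(f"{name}: {'yes' if present else 'no'}")
```

A missing flag can also mean that the kernel or hypervisor is too old to expose the feature, not only that the CPU lacks it.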

I/O and memory subsystem advancements include:

- DDR5: Improve compute performance by overcoming data bottlenecks with higher memory bandwidth. DDR5 offers up to 1.5x bandwidth improvement over DDR4.

- PCI Express Gen 5 (PCIe 5.0): Unlock new I/O speeds with the highest possible throughput between the CPU and devices. 4th Gen Intel Xeon Scalable and Intel Xeon Max Series processors have up to 80 lanes of PCIe 5.0, with double the I/O bandwidth of PCIe 4.0.

- Compute Express Link (CXL) 1.1: Gain support for high fabric bandwidth and efficient attached accelerators.
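The "double the I/O bandwidth" figure for PCIe 5.0 follows from the per-lane signalling rates. As a rough sketch, assuming the published rates of 16 GT/s (PCIe 4.0) and 32 GT/s (PCIe 5.0) with 128b/130b line encoding, and ignoring packet and protocol overhead, the theoretical one-direction bandwidth can be estimated as follows. The function names are illustrative.

```python
# Per-lane signalling rates in GT/s; both generations use 128b/130b encoding.
TRANSFER_RATE_GT_S = {"4.0": 16.0, "5.0": 32.0}
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gb_s(generation: str) -> float:
    """Theoretical GB/s for one lane in one direction."""
    return TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY / 8


def link_bandwidth_gb_s(generation: str, lanes: int) -> float:
    """Theoretical GB/s for a group of lanes in one direction."""
    return lane_bandwidth_gb_s(generation) * lanes


if __name__ == "__main__":
    for gen in ("4.0", "5.0"):
        total = link_bandwidth_gb_s(gen, lanes=80)
        print(f"PCIe {gen}, 80 lanes: {total:.1f} GB/s per direction")
```

Real devices deliver less than these figures, because transaction-layer headers and flow control consume part of the raw link rate.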

Intel Max Series CPUs offer flexibility to run in different memory modes, or configurations, depending on the workload characteristics:

- HBM-Only Mode: For workloads that fit in 64 GB of capacity and scale at 1-2 GB of memory per core. The system boots with no DDR installed, and no code changes are required.

- HBM Flat Mode: For applications that require large memory capacity. HBM and DRAM are exposed as a flat memory region, which suits workloads requiring more than 2 GB of memory per core. Code changes may be needed.

- HBM Cache Mode: For workloads that need more than 64 GB of capacity or more than 2 GB of memory per core. HBM acts as a cache for DDR, and no code changes are required.
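The guidance above can be condensed into a rough decision helper. This is only a sketch under simplifying assumptions: it considers a single socket with 64 GB of HBM, reduces the choice to the memory footprint and whether the application can be modified, and uses hypothetical function names. Benchmarking the actual workload remains the better guide.

```python
HBM_PER_SOCKET_GB = 64  # in-package HBM capacity stated in the text


def hbm_per_core_gb(cores: int, hbm_gb: float = HBM_PER_SOCKET_GB) -> float:
    """HBM capacity available per core on one socket."""
    return hbm_gb / cores


def suggest_memory_mode(footprint_gb: float, can_change_code: bool,
                        hbm_gb: float = HBM_PER_SOCKET_GB) -> str:
    """Suggest a memory mode for one socket (simplified heuristic)."""
    if footprint_gb <= hbm_gb:
        # The whole working set fits in HBM, so DDR is not needed.
        return "HBM-Only"
    if can_change_code:
        # The application can place hot data in HBM explicitly.
        return "HBM Flat"
    # Let the hardware use HBM as a transparent cache in front of DDR.
    return "HBM Cache"


if __name__ == "__main__":
    print(f"HBM per core at 56 cores: {hbm_per_core_gb(56):.2f} GB")
    print(suggest_memory_mode(48, can_change_code=False))
    print(suggest_memory_mode(200, can_change_code=True))
    print(suggest_memory_mode(200, can_change_code=False))
```

With 56 cores sharing 64 GB, each core has a little over 1 GB of HBM, which is why workloads above roughly 2 GB per core are steered toward the Flat or Cache modes.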

The new VMware vSphere 8.0.3 will add support for this processor and its new features.