One important concept of virtual networking is that the virtual network adapter (vNIC) speed is just a “soft” limit, used to provide a common model comparable with the physical world.

For this reason, the Intel e1000 and e1000e vNICs can reach a real bandwidth higher than the canonical 1 Gbps link speed.

But what about the vmxnet3 vNIC, which can also advertise a 10 Gbps link speed? What is the real bandwidth of this adapter?

If you search the Internet you can find plenty of comparisons between vmxnet3 and other adapters (like e1000 and e1000e), along with many benchmarks and numbers:

- VMXNET3 vs. VMXNET2 Performance Shootout

- E1000, E1000E and VMXNET3 performance test

- CHOOSING A VMWARE NIC: SHOULD YOU REPLACE YOUR E1000 WITH THE VMXNET3?

- VMXNET3 vs E1000E and E1000

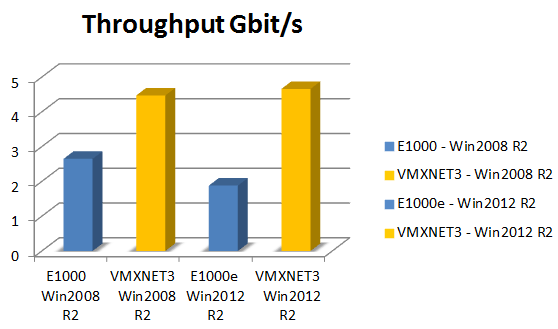

If you look at the in-depth comparison from Rickard Nobel, you can find some very low numbers:

However, that post is six years old, the guest OSes tested are not the latest ones, and Linux was not included to check whether it behaves differently.

Other tests seem to show that the vmxnet3 can reach the 10 Gbps limit much more easily, as in this blog post:

But many customers now have faster physical networks, at 25 Gbps, 40 Gbps, or even 100 Gbps. How does the vmxnet3 perform beyond the 10 Gbps soft limit?

There is also another important aspect to consider: the same adapter can reach very different speeds in different scenarios.

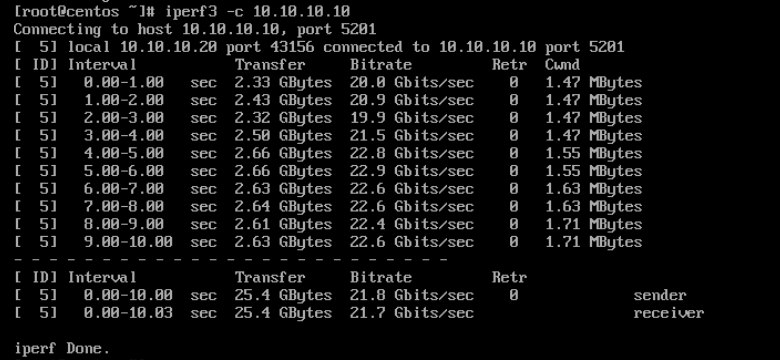

The first big difference concerns the internal network speed on a virtual switch: if two VMs are on the same host, on the same virtual switch and in the same port group, the speed can be totally different:

As you can see the vmxnet3 can reach more than 20 Gbps in this scenario.

I tested this between two CentOS 8 VMs connected to distributed virtual switches on a vSphere 6.7 host (with Xeon Gold CPUs). The interesting aspect is the speed you can reach, which makes a VM affinity rule very attractive for VMs that are very chatty with each other.

Testing the external speed is much more complicated, because many variables can make a difference: the host type (especially the CPU), the physical NIC (pNIC) type and chipset, the external switches, and so on.

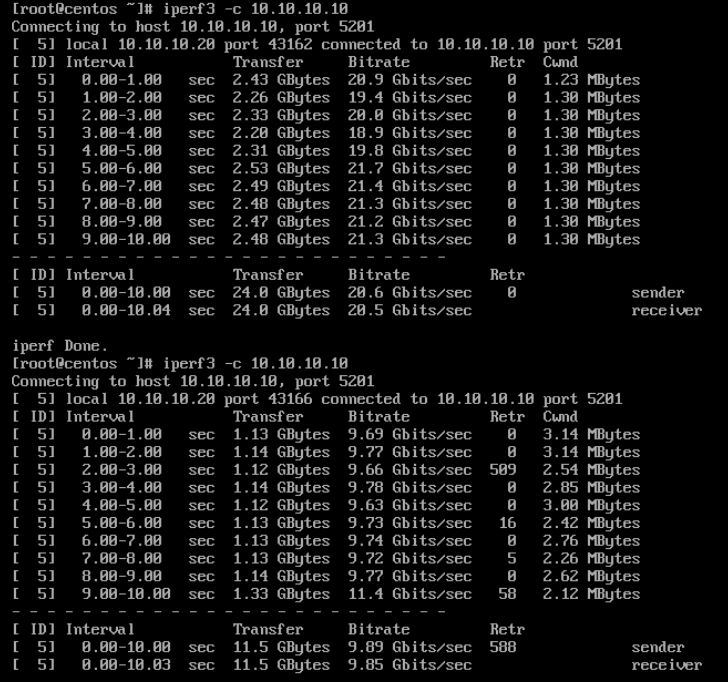

For the external speed test, I simply measured the speed between two VMs on two different hosts connected to a 100 Gbps network, so that the external network is not the bottleneck.
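The post does not say which tool was used for these measurements (dedicated tools such as iperf3 are the usual choice). Purely to illustrate what such a test computes, here is a minimal single-stream TCP throughput sketch in Python: it pushes a fixed number of bytes through one socket and reports Gbit/s, which can then be compared with the advertised link speed. Everything here is an assumption for illustration: the function names `receiver` and `measure_gbps`, the 64 KiB chunk size, and the loopback default. To test between two VMs, the receiving and sending halves would have to run on separate machines. A pure-Python loop will also not saturate a fast vNIC, so treat this as a model of the measurement, not a benchmark.

```python
import socket
import threading
import time

CHUNK = 64 * 1024  # bytes per send/recv call (assumed value, not from the post)


def receiver(server: socket.socket, result: dict) -> None:
    """Accept one connection and count bytes until the sender closes it."""
    conn, _ = server.accept()
    total = 0
    with conn:
        while True:
            data = conn.recv(CHUNK)
            if not data:  # empty read: sender closed the connection
                break
            total += len(data)
    result["bytes"] = total


def measure_gbps(host: str = "127.0.0.1",
                 total_bytes: int = 256 * 1024 * 1024) -> tuple[int, float]:
    """Send total_bytes over one TCP stream; return (bytes received, Gbit/s)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    result: dict = {}
    thread = threading.Thread(target=receiver, args=(server, result))
    thread.start()

    payload = memoryview(b"\x00" * CHUNK)
    start = time.perf_counter()
    with socket.create_connection((host, port)) as client:
        sent = 0
        while sent < total_bytes:
            n = min(CHUNK, total_bytes - sent)
            client.sendall(payload[:n])
            sent += n
    thread.join()  # wait until the receiver has drained every byte
    elapsed = time.perf_counter() - start
    server.close()

    received = result["bytes"]
    gbps = received * 8 / elapsed / 1e9  # bytes -> bits, then bits/s -> Gbit/s
    return received, gbps


if __name__ == "__main__":
    received, gbps = measure_gbps()
    print(f"received {received} bytes at {gbps:.2f} Gbit/s")
```

Counting on the receiver side and stopping the clock only after the receiver has finished avoids overestimating throughput from data that is still sitting in socket buffers.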

From a short test, I found a big difference depending on the pNIC type.

The first test was between two hosts with a Mellanox pNIC, the second between two hosts with an Intel pNIC:

Is 20 Gbps the real speed limit of the vmxnet3? In any case, it is an interesting speed for a single VM, and good enough for most cases.

Note that I have not tested Windows guests to see whether there are any differences, and I have not yet tested the Paravirtual RDMA vNIC type.

Another interesting test could use different teaming options, but I don’t believe that a single 100 Gbps physical link could be a constraint in this type of test.