
After the new Virtual Volumes, the most “spoilered” feature of the new VMware vSphere 6.0 was probably the new vMotion, which allows VMs to move across the boundaries of vCenter Server instances, Datacenter objects, Folder objects, and also virtual switches and geographical areas.

VMware vMotion was probably the first important virtualization-related feature that made VMware (and its products) important but, more importantly, that made the virtualization approach relevant: having VM mobility means being able to handle planned downtime and also workload balancing.

Now VMware is reinventing vMotion to become more agile and more cloud oriented: breaking the boundaries and going beyond the usual limits makes VM mobility across clouds possible. Note that currently it’s not (yet) possible to use this feature to live migrate to or from a vCloud Air service, but of course this is the first step toward doing this in the future.

When all VM properties and policies can also “follow” the VM (and the VMware SDN and SDS approaches are going in this direction), it will really be possible to implement the “one cloud” vision of VMware in an easy way.

The history of vMotion enhancements is quite long, but most of the interesting news appeared in vSphere 5.x:

- v5.0: Multi-NIC vMotion, support for higher-latency networks (up to 10 ms) and Stun During Page Send (SDPS)

- v5.1: vMotion without shared storage

- v5.5: improvements for metro clusters

Now with vSphere 6.0 there are new vMotion scenarios:

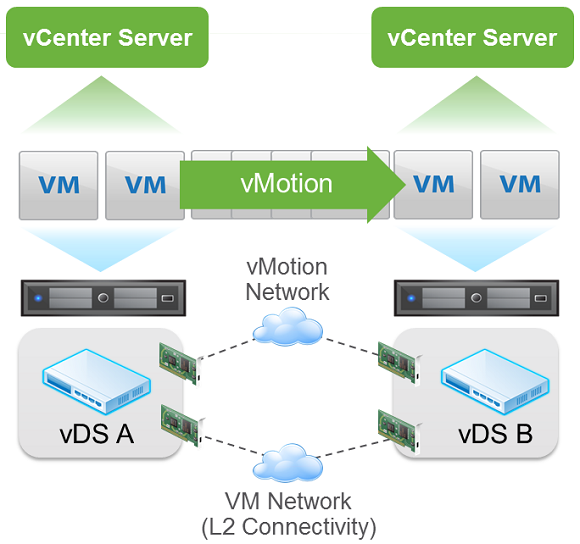

- Cross vSwitch vMotion: Allows VMs to move between virtual switches in an infrastructure managed by the same vCenter. This operation is transparent to the guest OS and works across different types of virtual switches (vSS to vSS, vSS to vDS, vDS to vDS).

- Cross vCenter vMotion: Allows VMs to move across boundaries of vCenter Server, Datacenter Objects and Folder Objects. This operation simultaneously changes compute, storage, network and vCenter.

- Long Distance vMotion: Enables vMotion to operate across long distances (in the vSphere 6.0 beta, the maximum supported network round-trip time for vMotion migrations is 100 milliseconds).

Note that all these operations still require L2 connectivity for the VM network, simply because it’s a VM migration that does not change the IP address of the VM (otherwise we are talking about site DR scenarios, and in those cases SRM, for example, could still be a solution). This seems a problem, especially for migrations across datacenters (in some large environments the IP subnet can also change across racks), but SDN (and, for VMware, NSX) is the solution to “virtualize” the network layer.

And also remember that a live migration requires that the processors of the target host provide the same instructions to the virtual machine after migration that the processors of the source host provided before migration. Clock speed, cache size, and number of cores can differ between source and target processors. However, the processors must come from the same vendor class (AMD or Intel) to be vMotion compatible.

So in all these migration scenarios a common EVC baseline is usually required (note: do not add virtual ESXi hosts to an EVC cluster, because virtualized ESXi hosts are not supported in EVC clusters).

There are also additional requirements for both hosts and VMs, but they are almost the same as in previous versions of vMotion (see the vCenter and Host Management Guide for more information). For example:

- Each host must be correctly licensed for vMotion

- Each host must meet shared storage requirements for vMotion

- Each host must meet the networking requirements for vMotion

It’s curious that in the current documents (but it’s still an RC and not the final version), the best practices for vMotion networking paragraph now suggests using jumbo frames for best vMotion performance (let’s see if it will also be confirmed in the final version).

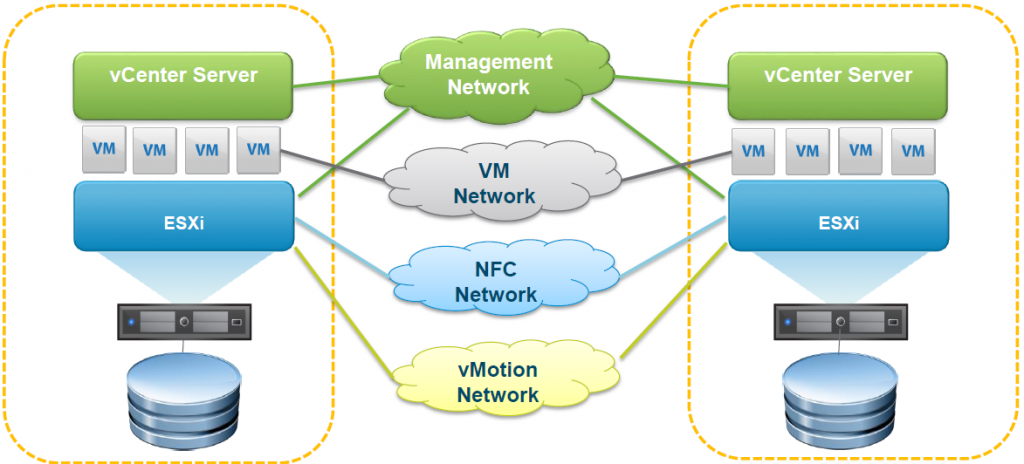

There are also several other improvements, starting with increased vMotion network flexibility: vMotion traffic is now fully supported over an L3 connection. The vMotion network can now cross L3 boundaries, and the NFC network, which carries cold traffic, will be configurable.

But there are also several improvements at the VM level in order to provide more flexibility and mobility. For example:

- You can migrate virtual machines that have 3D graphics enabled. If the 3D Renderer is set to Automatic, virtual machines use the graphics renderer that is present on the destination host. The renderer can be the host CPU or a GPU graphics card. To migrate virtual machines with the 3D Renderer set to Hardware, the destination host must have a GPU graphics card.

- You can migrate virtual machines with USB devices that are connected to a physical USB device on the host. You must enable the devices for vMotion.

- You can migrate Microsoft Cluster virtual machines. Note that these VMs need to use physical RDMs, and this is supported only with Windows 2008, 2008 R2, 2012 and 2012 R2.

But let’s go back to the biggest changes in vMotion: vMotion across vCenter Servers and long-distance vMotion.

Cross vCenter vMotion

As written above, this operation simultaneously changes compute, storage, network, and management, and can be used for local, metro, and cross-continental distances.

Properties of vMotion across vCenter Server instances:

- VM UUID maintained across vCenter Server instances (not the same as MoRef or BIOS UUID)

- MAC address of the virtual NIC is preserved across vCenters and always remains unique within a vCenter. Note that MAC addresses are not reused when the VM leaves the vCenter

- VM historical data preserved: Events, Alarms, Task History

- Solution interoperability maintained:

  - HA properties preserved

  - DRS Affinity/Anti-Affinity Rules

The second point is the most interesting, but it may also have some design impact: how do you ensure that two different vCenters generate different MAC address ranges for their VMs? As Rick Schlander suggested on Twitter, the suggested way is to use KB 1024025 (Virtual machine MAC address conflicts or have a duplicate MAC Address when creating a virtual machine) and make sure that each vCenter Server has a different instance ID.
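To make the design impact more concrete, here is a minimal Python sketch that checks a set of vCenter instance IDs for collisions before enabling cross vCenter vMotion. It assumes the default VMware OUI allocation scheme as I understand it from the VMware documentation (prefix `00:50:56`, fourth octet equal to hex 80 plus the instance ID, with IDs from 0 to 63), so verify it against your own environment. The vCenter names and the helper functions `mac_prefix` and `find_conflicts` are hypothetical and only for illustration.

```python
from typing import Dict, List

# Assumption: default VMware OUI allocation, where generated MACs look like
# 00:50:56:XX:YY:ZZ and XX = 0x80 + vCenter Server instance ID (0..63).
VMWARE_OUI = "00:50:56"


def mac_prefix(instance_id: int) -> str:
    """Return the MAC prefix a vCenter with this instance ID would use."""
    if not 0 <= instance_id <= 63:
        raise ValueError("vCenter instance ID must be between 0 and 63")
    return f"{VMWARE_OUI}:{0x80 + instance_id:02x}"


def find_conflicts(vcenters: Dict[str, int]) -> Dict[int, List[str]]:
    """Group vCenter names by instance ID and keep only the shared IDs."""
    by_id: Dict[int, List[str]] = {}
    for name, instance_id in vcenters.items():
        by_id.setdefault(instance_id, []).append(name)
    return {iid: names for iid, names in by_id.items() if len(names) > 1}


if __name__ == "__main__":
    # Hypothetical inventory: two sites accidentally share instance ID 10.
    inventory = {"vc-milan": 10, "vc-rome": 10, "vc-london": 11}
    for iid, names in find_conflicts(inventory).items():
        print(f"ID {iid} ({mac_prefix(iid)}:xx:xx) shared by: {', '.join(names)}")
```

If two vCenters share the same instance ID, they generate MAC addresses from the same prefix, so a migrated VM could end up with the same address as a VM created later on the other side.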

Requirements for vMotion across vCenter Server instances:

- vCenter 6.0 and greater with ESXi Enterprise Plus (according to the comparison tables)

- Same SSO domain for the destination vCenter Server instance when using the UI; different SSO domains are possible using the API (see this post).

- 250 Mbps network bandwidth per vMotion operation

- L2 VM network connectivity

Long-distance vMotion

Mainly it’s just a specific case of the previous operation, where you move the VM across countries. Requirements and properties remain the same as in the previous case.

In particular, you have to consider these specific network requirements for concurrent vMotion migrations:

- You must ensure that the vMotion network has at least 250Mbps of dedicated bandwidth per concurrent vMotion session. Greater bandwidth lets migrations complete more quickly. Gains in throughput resulting from WAN optimization techniques do not count towards the 250Mbps limit.

- In vSphere 6.0, the maximum supported network round-trip time (RTT) for vMotion migrations is 100 milliseconds.

Both figures come from the latest available documents, which refer to the RC and not the final version, so they may change. The bandwidth requirement will probably be more limiting than the latency requirement, considering that the round-trip times we see in the real world are, for example:

- 73ms from New York to San Francisco (4100km)

- 80ms from New York to Amsterdam (5900km)
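As a quick sanity check, the two requirements above can be expressed as a small Python sketch. It only uses the RC figures quoted in this post (250 Mbps of dedicated bandwidth per concurrent session and a 100 ms maximum RTT). The function names `check_link` and `max_concurrent_sessions` and the link values in the example are hypothetical.

```python
from typing import List

# Figures taken from the RC documentation quoted above; they may change.
MIN_MBPS_PER_SESSION = 250
MAX_RTT_MS = 100


def max_concurrent_sessions(dedicated_mbps: float) -> int:
    """How many concurrent vMotion sessions the dedicated bandwidth allows."""
    return int(dedicated_mbps // MIN_MBPS_PER_SESSION)


def check_link(dedicated_mbps: float, rtt_ms: float, sessions: int = 1) -> List[str]:
    """Return a list of unmet requirements (empty if the link qualifies)."""
    problems = []
    needed = MIN_MBPS_PER_SESSION * sessions
    if dedicated_mbps < needed:
        problems.append(
            f"bandwidth: {dedicated_mbps} Mbps available, {needed} Mbps needed"
        )
    if rtt_ms > MAX_RTT_MS:
        problems.append(f"latency: RTT {rtt_ms} ms exceeds {MAX_RTT_MS} ms")
    return problems


if __name__ == "__main__":
    # Hypothetical 500 Mbps dedicated link with the New York to Amsterdam RTT.
    print(max_concurrent_sessions(500))
    print(check_link(dedicated_mbps=500, rtt_ms=80, sessions=4))
```

With the RTT values listed above, the latency check passes for both routes, and it is the bandwidth check that fails as soon as several migrations run in parallel on a modest link.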

Other networking requirements are:

- If it’s also cross vCenter, then the two vCenters must be connected via L3 (or L2)

- VM network must be the same L2 connection (same VM IP address available at destination)

- vMotion network: could also be an L3 connection, but must be secure (dedicated or encrypted) and meet the previous bandwidth and latency requirements

- NFC network: could be routed L3 through Management Network or L2 connection

The proposed use cases for this type of migration are interesting:

- Permanent migrations: moving workload across clouds

- (Planned) Disaster avoidance: it could be interesting to see if the next version of SRM will use this feature for planned failover

- Multi-site load balancing: this could be interesting in the future (or for a possible geo-DRS)

- Follow the sun: this is quite interesting and could make sense for those working across different time zones, or potentially for other cases (like fresh-air datacenters, where you try to keep the workload in the most energy-efficient site)
