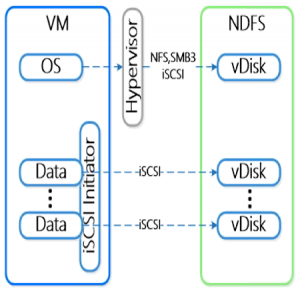

Historically, Nutanix was built around iSCSI, but it later added the ability to run NFS as well as other IP-based front-end protocols. Since version 2.5, NFS/NDFS has been the preferred protocol for a Nutanix cluster.

Although the Nutanix Distributed File System (NDFS) is stretched across all nodes and built on the pool of each node’s local storage resources, all I/O for a VM is served by the local CVM. The storage can be presented to the hypervisor via iSCSI, NFS or SMB3 (introduced in NOS 3.5), not only from the local CVM but from any CVM in the cluster.

The iSCSI protocol has not gone away: it is still present, it is simply no longer the preferred one. But NOS 4.1.3 (released on June 15th) brings an important new feature related to iSCSI support:

- Tech Preview of in-guest ISCSI support – We are very happy to announce tech preview of in-guest ISCSI support to add Nutanix vDisks into Windows guests by using the native Microsoft iSCSI software initiator adapter.

Instead of using the hypervisor’s storage features and passing through its storage layers, a guest iSCSI disk is just a passthrough to the underlying storage level and a direct connection to a vDisk object.

Why is this needed, and why should it matter? Since NFS is the recommended protocol for VMware datastores, you can end up with “unsupported” workloads, such as Microsoft Exchange, which is not supported on NFS. With in-guest iSCSI support, Exchange will now be officially supported on Nutanix in VMware vSphere environments.

Another interesting use case is guest failover clustering, where you need one or more shared block devices.

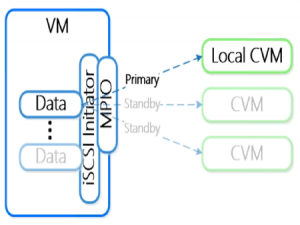

But the most interesting aspect is how data locality is handled (so that the local CVM is used as the preferred data path), along with the resiliency of the vDisk in case the local data is not available.

To handle both cases, Microsoft MPIO is configured with the primary path pointing to the local CVM and all the other CVMs as standby paths. This way, if the local CVM fails, MPIO takes care of the path switch (usually in around 16 seconds) without disrupting the guest’s I/O.
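To make the active/standby idea concrete, here is a minimal Python sketch of a failover-only path selection. It is only an illustration of the policy described above, not Nutanix or Microsoft code: the `Path` class, the `pick_path` function and the CVM labels are hypothetical names, and the real logic lives inside the Windows MPIO stack.

```python
from dataclasses import dataclass


@dataclass
class Path:
    cvm: str              # hypothetical label for the CVM behind this path
    active: bool          # True for the preferred path (the local CVM)
    healthy: bool = True  # flipped to False when the CVM is unreachable


def pick_path(paths):
    """Return the path that I/O should use under a failover-only policy."""
    healthy = [p for p in paths if p.healthy]
    if not healthy:
        raise RuntimeError("no healthy path to the vDisk")
    # Prefer the active path (local CVM) so that data locality is preserved.
    for p in healthy:
        if p.active:
            return p
    # Local CVM is down: fall back to the first healthy standby path.
    return healthy[0]


# Assumed example topology: one local CVM and two remote standby CVMs.
paths = [
    Path("cvm-local", active=True),
    Path("cvm-remote-1", active=False),
    Path("cvm-remote-2", active=False),
]
```

With all paths healthy, `pick_path(paths)` returns the local CVM path. Marking `paths[0].healthy = False` makes it return the first standby, and restoring the flag sends I/O back to the local CVM, which is the behaviour you would expect from a failback to the preferred path.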

During the technical preview, volumes must be configured through the API, but when this feature becomes GA everything will be integrated into the usual interfaces.

As you can see, guest iSCSI is currently designed for Windows VMs, and the use cases are the two described above (workloads that need it for support reasons, and guest clustering).

Is it also possible with Linux VMs or other OSes? Theoretically yes, but the scripts do not configure the guest iSCSI initiator with the proper path preferences. In any case, at this stage only Windows guest OSes are supported.