During the second day of the third edition of Virtualization Field Day (#VFD3), one of the companies that we (as delegates) met was Coho Data, a (new) cool company in the big storage arena. They started out as Convergent.io, with the specific goal of changing the way storage is built and managed. This session also coincided with the announcement of the General Availability (GA) of their Web-Scale Storage Appliance for the Enterprise.

Formally they are a software company, but the solution is a storage appliance that ships with both the hardware and the software already packaged together (there are several reasons for this choice, but mainly to simplify the HCL and to get predictable performance from their scaling approach). The entire architecture is well described on their web site and in the #VFD3 video.

Modular solution…

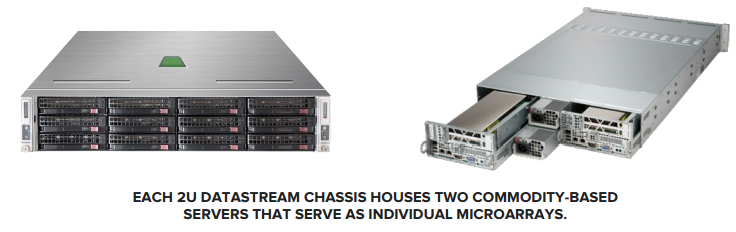

The DataStream Chassis is a balanced storage building block: a 2U rack unit containing two MicroArrays and their storage. Each chassis delivers 39TB of storage capacity and can be scaled one MicroArray at a time, so you buy only what your business needs when you need it. Each MicroArray is based on:

- Two Intel Xeon E5-2620 Processors

- Two Intel 910 Series 800GB PCIe Flash Cards

- Six 3TB SATA disks

- Two 10Gb NICs
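As a quick sanity check, the 39TB per chassis figure can be reproduced from the component list above. This is only a sketch: it assumes the quoted capacity is raw (before any redundancy overhead), that flash and disk are simply added together, and that decimal units are used. The function names are just illustrative.

```python
# Figures per MicroArray, taken from the component list above.
FLASH_CARDS = 2
FLASH_CARD_GB = 800
DISKS = 6
DISK_TB = 3
MICROARRAYS_PER_CHASSIS = 2


def microarray_raw_tb():
    """Raw capacity of one MicroArray in decimal TB (assumption: flash + disk)."""
    flash_tb = FLASH_CARDS * FLASH_CARD_GB / 1000
    disk_tb = DISKS * DISK_TB
    return flash_tb + disk_tb


def chassis_raw_tb():
    """Raw capacity of a full chassis with two MicroArrays."""
    return MICROARRAYS_PER_CHASSIS * microarray_raw_tb()


print(f"{microarray_raw_tb():.1f} TB per MicroArray")
print(f"{chassis_raw_tb():.1f} TB per chassis")
```

Under these assumptions a chassis comes out at about 39.2 TB, which lines up with the 39TB quoted above.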

The solution is described as “Open Hardware” based, which mainly means open, commodity components: essentially a Supermicro high-density system with two Intel servers and storage (not shared between the servers, just partitioned). The choice of the 2-server version rather than the 4-server one (still in 2U) is mainly related to the number of PCIe slots available. The solution is also called “Open Hardware” because Coho Data’s MicroArray model implements a data hypervisor that does for enterprise storage what a VMM does for a CPU: it is a minimal, high-performance layer that allows tenants to safely share storage hardware with isolated performance.

…With Scale-Out approach

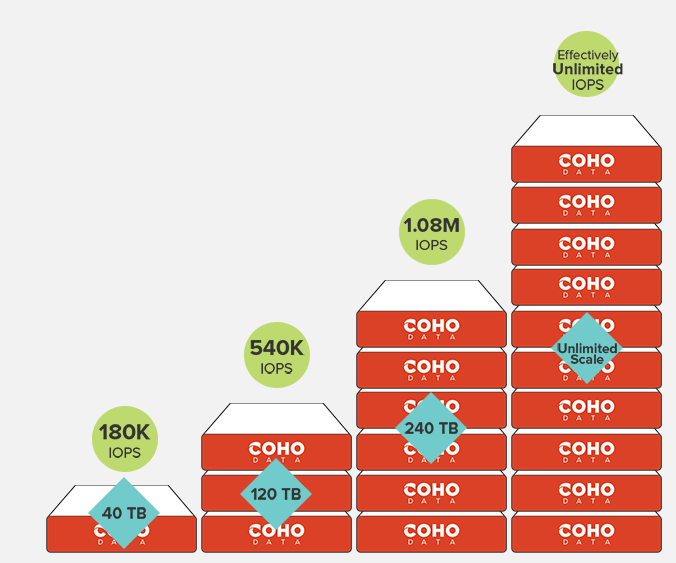

Coho Data is a real scale-out storage solution with predictable, linear scaling: each unit can provide 180K IOPS (80% read/20% write, 4K random) and you can scale as far as you want (next year a new, faster hardware model will probably be available; in that case you will still scale linearly with that model).
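To make the linear scaling claim concrete, here is a trivial sketch of the ideal model. It simply multiplies the quoted per-unit figure by the number of units; it is the vendor's claim expressed as arithmetic, not a measurement, and `aggregate_iops` is a name made up for this example.

```python
# Per-unit figure quoted in the session (80% read / 20% write, 4K random).
IOPS_PER_UNIT = 180_000


def aggregate_iops(units, iops_per_unit=IOPS_PER_UNIT):
    """Ideal linear scale-out: total IOPS grows in proportion to the units."""
    if units < 0:
        raise ValueError("units must be non-negative")
    return units * iops_per_unit


for units in (1, 2, 4, 8):
    print(f"{units} unit(s): {aggregate_iops(units):,} IOPS")
```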

…Fully converged

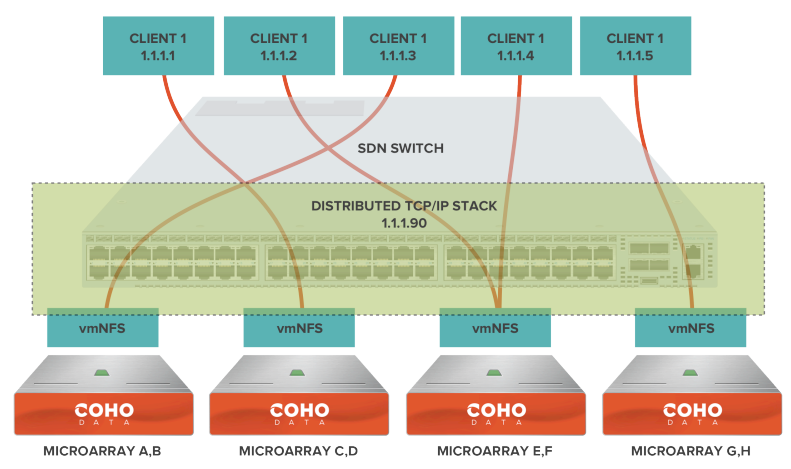

This is not only a Software Defined Storage (SDS) solution: it also includes a Software Defined Network (SDN) approach, which is almost unique. The entire DataStream solution is composed of (at least) one DataStream SDN-enabled 10GbE switch and (at least) one 2U DataStream Chassis with two MicroArrays.

The provided switch is a “rebranded” Arista switch running OpenFlow, which is used both to simplify the deployment and to provide the right data path for the storage flows.

It is easy to understand how SDN helps with deployment and management (management, including switch firmware upgrades, can be done from a single interface), but more words are needed on how SDN is used for data path selection.

Currently Coho Data exposes a single NFS namespace (other protocols are on the roadmap), but the way it is used is quite unique: to simplify the configuration and allow a scalable approach, a single IP address is provided on the storage side, and SDN is used to select a single 10 Gbps path for each client (a client from the storage network point of view, so usually a server or a virtualization host). In case of link failure another path is selected.
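The idea of one shared IP address with one path per client can be pictured with a small toy model. This is not Coho Data's implementation and not OpenFlow code: the class, the port names, the IP address and the "least loaded port" policy are all assumptions made up for illustration. It only shows the behaviour described above: every client targets the same address, is pinned to one path, and is moved to another path if its link fails.

```python
class PathSelector:
    """Toy model: all clients target one virtual IP, and a controller
    pins each client to exactly one healthy storage-side port."""

    def __init__(self, virtual_ip, ports):
        self.virtual_ip = virtual_ip
        self.healthy = list(ports)   # ports currently usable
        self.assignment = {}         # client -> port

    def _least_loaded(self):
        # Hypothetical policy: pick the healthy port with the fewest clients.
        if not self.healthy:
            raise RuntimeError("no healthy path to the storage")
        load = {port: 0 for port in self.healthy}
        for port in self.assignment.values():
            if port in load:
                load[port] += 1
        return min(self.healthy, key=lambda port: load[port])

    def path_for(self, client):
        """Return the client's pinned port, choosing one if needed."""
        port = self.assignment.get(client)
        if port not in self.healthy:
            port = self._least_loaded()
            self.assignment[client] = port
        return port

    def link_failed(self, port):
        """Mark a port as down and re-pin the clients that were using it."""
        if port in self.healthy:
            self.healthy.remove(port)
        for client, assigned in list(self.assignment.items()):
            if assigned == port:
                del self.assignment[client]
                self.path_for(client)


# Hypothetical names: four storage-side 10 Gbps ports, four ESXi hosts.
selector = PathSelector("10.0.0.10", ["ma1-nic1", "ma1-nic2", "ma2-nic1", "ma2-nic2"])
for host in ("esx1", "esx2", "esx3", "esx4"):
    print(host, "->", selector.virtual_ip, "via", selector.path_for(host))

selector.link_failed("ma1-nic1")
print("esx1 after failure ->", selector.path_for("esx1"))
```

The clients never change the address they mount; only the controller's mapping changes, which is why a single IP address on the storage side is enough.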

This sounds really crazy (or at least strange), but it’s important to understand that each NFS interface on the storage side does not need to talk to the other interfaces. For communication between MicroArrays (for example for management and data redundancy) a private network (not drawn in the previous diagram) is used. Seen this way, the entire network architecture makes sense and becomes powerful and elegant.

Of course there is no need (or at least it is not mandatory) to use SDN in the rest of the network as well.

And, of course, the switch can be made redundant with a second switch. In this case SDN is also used to configure the connections and the redundancy across the two switches, both to define the LAG and to define the optimal, lowest-cost path from the client to the MicroArray.

…Hybrid and not full flash

This product tries to solve two big storage problems:

- problem 1: flash pricing is not disk pricing

- problem 2: host-side flash isn’t a panacea

Too many solutions let you put an SSD into a traditional drive slot but then cannot take advantage of its performance. Coho Data has run extensive tests on both SSD and PCIe flash. What they found is that an Intel PCIe flash card costs $5/GB and $.08/IOP (given an 800GB card providing 50K IOPS at 0.1 ms latency and costing $4K). On the other side, a standard 3TB drive costs $.08/GB and $1.67/IOP (given 150 IOPS, 10ms latency, and a $250 price). For this reason they chose a hybrid approach that uses the best of both worlds.
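The cost figures above are simple divisions, and it can help to see them side by side. The sketch below only reuses the numbers quoted in the session (price, capacity, IOPS); the `Device` class and its method names are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    price_usd: float
    capacity_gb: float
    iops: float

    def usd_per_gb(self):
        """Cost of capacity: price divided by usable gigabytes."""
        return self.price_usd / self.capacity_gb

    def usd_per_iop(self):
        """Cost of performance: price divided by IOPS delivered."""
        return self.price_usd / self.iops


# Numbers as quoted in the presentation.
flash = Device("Intel PCIe flash (800GB)", 4000, 800, 50_000)
disk = Device("SATA disk (3TB)", 250, 3000, 150)

for dev in (flash, disk):
    print(f"{dev.name}: ${dev.usd_per_gb():.2f}/GB, ${dev.usd_per_iop():.2f}/IOP")
```

With these numbers flash is about 60 times more expensive per GB, while disk is about 21 times more expensive per IOP, which is the whole argument for a hybrid design.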

But other aspects must also be considered: caching and tiering are different, and neither is better than the other in all cases (and, looking deeper, there are different types of cache policies and different types of tiering policies). Coho Data uses a special flash-first tiering, tuned for flash, in order to get optimal and deterministic performance.

…It’s a cool solution. Isn’t it?

Do you think that the entire solution is pretty cool? I certainly think it’s almost unique in how tightly the SDS and SDN approaches are tied together!

And it was certainly really cool to hear about their solution directly from Andrew Warfield, CTO, and Forbes Guthrie, Technical Product Manager at Coho Data, who is also known as an author, speaker and blogger. Both presentations were really clear and deep.

VAAI will also be supported in the GA release, which currently targets the VMware vSphere virtualization market (in the future other use cases will be covered too, so that it is not a VMware-only or virtualization-only solution).

And last but not least, it is really easy to manage and also fast to deploy: once racked, the DataStream solution installs in under 15 minutes, from power-on to being fully usable for all your application storage needs.

…No weakness at all?

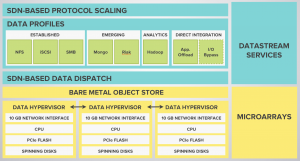

Of course this is a 1.0 GA version, so it cannot be completely perfect in all aspects. This version is focused mainly on VMware and can expose only NFSv3. But the design is able to expose different protocols and different paths via libdatapath.

iSCSI and SMB are already on the roadmap, and other DataStream services could be added.

Also, Coho Data is not yet seeing much Hyper-V among their prospects, but they’ll keep it in mind for the future roadmap. OpenStack is more interesting: if you have an OpenStack project and you are interested in Coho Data, just contact them.

Another limitation is in how the SDN part handles path management: in order to have two active paths on the ESXi side (which could be common, considering that you may have two 10 Gbps NICs for redundancy) you need another NFS datastore on a different IP address. But in the GA release there is a maximum of only one datastore; in the next versions more datastores may be possible.

More information

See also:

- Field Day delegates descend on Coho Data

- Coho Data video at #VFD3

- Tech Field Day VFD3 – Coho Data – Coho-ly Moly this is a cool product!

- Initial Musing about Coho Data Scale Out Networking

- Don’t Let Raw Storage Metrics Solely Drive Your Buying Decision

- Designing the Next Generation vSphere Storage Systems

- #VFD3 Day 2 : Kicking it with Coho

Disclaimer: I’ve been invited to this event by Gestalt IT and they paid for accommodation and travel, but I’m not compensated for my time and I’m not obliged to blog. Furthermore, the content is not reviewed, approved or published by any other person than me.