Fusion-IO is a well-known company in host-side flash solutions that accelerate databases, virtualization, cloud computing, big data and applications without changing your storage. Their In-Server Acceleration products are impressive (sometimes in price too). They can provide up to 10.24TB of flash to maximize performance for large data sets, and there are also solutions for blade servers (the ioDrive2® Mezzanine).

Thanks to Fusion-IO Italy, I had the opportunity to test the Fusion-io 410GB ioScale. It is the smallest model in this product line (ioScale products use MLC flash and come in 410GB, 825GB, 1650GB and 3.2TB capacities), but it is enough to provide good performance and to let me run some tests in my environment. These are second-generation ioScale devices.

Note that there are also several software layers that provide higher-level functions, such as ioTurbine Virtual, ioCache, ioVDI, … but of course you can also use the server cards with other third-party software that can manage flash storage such as this card or local SSD disks.

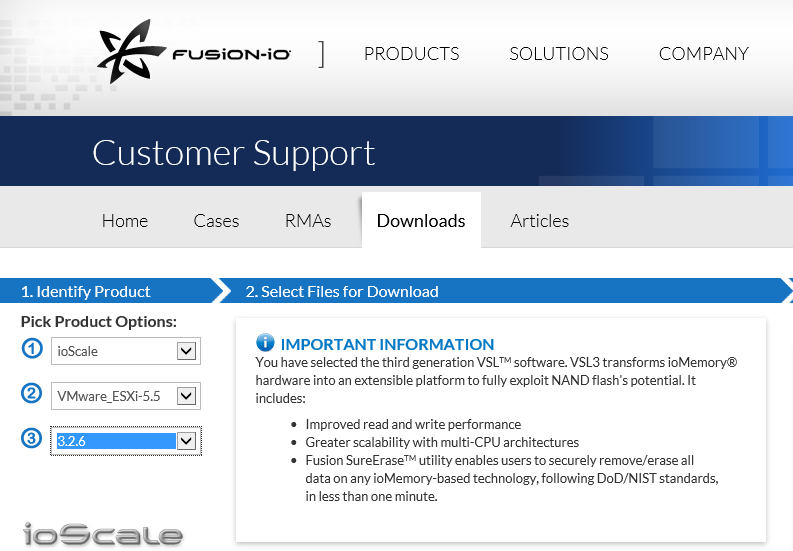

After you have installed the cards in your hosts (it is really important to use recent hosts with a fast PCIe bus, and to follow the installation guide's notes on power management and temperature control), you have to add some drivers. These can be obtained from the Fusion-IO support site (you will need to register an account and a product in order to start the download).

For VMware ESXi 5.5 the latest version is 3.2.6, but there are also drivers for other hypervisors and for physical servers.

You can verify that the Fusion-IO cards are installed by checking that they appear on the host's PCI bus:

```
# lspci | grep Fusio
0000:41:00.0 Mass storage controller: Fusion-io ioDrive2 [fioiom0]
```
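If you check several hosts, the same test can be scripted against saved `lspci` output. The Python sketch below is only an illustration: the line pattern is inferred from the single sample line shown above (PCI address, device class, description, name in square brackets), not from any documented format, and `find_fusionio_devices` is a hypothetical helper name.

```python
import re

# Pattern for ESXi lspci lines such as:
#   0000:41:00.0 Mass storage controller: Fusion-io ioDrive2 [fioiom0]
# Inferred from that one sample line (an assumption, not a documented format).
LSPCI_LINE = re.compile(
    r"^(?P<address>[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s+"
    r"(?P<device_class>[^:]+):\s+"
    r"(?P<description>.*?)"
    r"(?:\s+\[(?P<name>[^\]]+)\])?$"
)


def find_fusionio_devices(lspci_output):
    """Return one dict per lspci line whose description mentions Fusion-io."""
    devices = []
    for line in lspci_output.splitlines():
        match = LSPCI_LINE.match(line.strip())
        if match and "Fusion-io" in match.group("description"):
            devices.append(match.groupdict())
    return devices


if __name__ == "__main__":
    sample = "0000:41:00.0 Mass storage controller: Fusion-io ioDrive2 [fioiom0]"
    for dev in find_fusionio_devices(sample):
        print(dev["address"], dev["name"], dev["description"])
```

An empty result simply means no line mentioned Fusion-io, which is the same condition as `grep Fusio` printing nothing.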

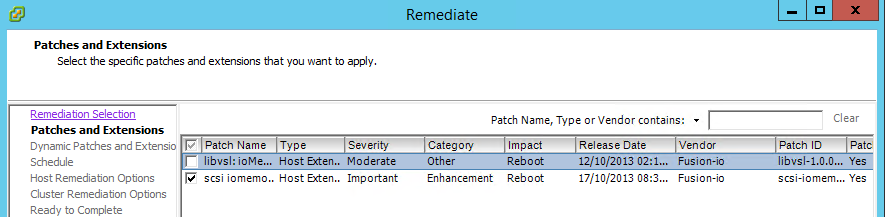

At this point you can add the drivers and the library for the cards. One simple way is to use VMware Update Manager (VUM): import the two required files into the repository and then add them to a baseline.

Remediation must be done in sequence: first the driver package (select it in the remediation selection), and then the library package.

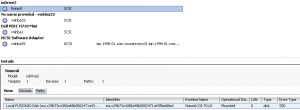

At this point you should see a new storage adapter with the corresponding block storage (type SSD).

You can start using it as a local disk (for example, to run some speed tests) by using the ioMemory device as a VMFS datastore within the hypervisor and then sharing that storage with guest operating systems. In this case guests can be 32-bit or 64-bit, because they do not use the ioMemory device directly. Another option is VMDirectPath I/O, which allows a virtual machine to use the ioMemory device directly. In this case only supported operating systems can use the device, and you do not need to install the ioMemory VSL software on the ESXi system.

But the most interesting use is as a host cache with caching software, or as backing storage for a Virtual Storage Appliance (VSA) or some kind of Software-Defined Storage (SDS). In upcoming posts I will describe some tests with PernixData and VSAN.

On the host side, some new CLI commands are also added (in the /opt/fio/bin directory). The most interesting ones are those for reformatting the flash with a different block size: some OSes still need a 512-byte block size and can have performance issues with the newer 4K standard (note that ioFX devices are pre-formatted with 4 KB sectors; all other ioMemory devices ship formatted with 512 B sectors). According to the documentation, the recommended block size for ESXi is 512 B.

```
/opt/fio/bin # fio-format -h
Fusion-io format utility (v3.2.6.1219 pinnacles@23215236e91a)
usage: fio-format [options] <device>
[B,K,M,G,T,P,%] are <u>nits: Bytes, KBytes, MBytes, GBytes, TBytes, PBytes and percent.
Options:
-b, --block-size <size>[B,K] Set the block (sector) size, in Bytes or KiBytes (base 2).
-s, --device-size <size>[<u>] Size to format device where max size is default capacity.
-o, --overformat <size>[<u>] Overformat device size where max size is maximum physical capacity.
-P, --enable-persistent-trim Make persistent trim feature available on the device.
-R, --slow-rescan Disable fast rescan on unclean shutdown to reclaim some capacity.
-f, --force Force formatting outside standard limits.
-q, --quiet Quiet mode - do not print progress.
-y, --yes Auto answer 'yes' to all confirmation requests.
-v, --version Print version information.
-h, --help Print the help menu.
```
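Changing the sector size with `-b` changes the number of sectors, not the amount of data the device holds, so the sector count scales inversely with the block size. The short Python sketch below works through that arithmetic for this card. It assumes the "410.00 GBytes device size" that fio-status reports is in decimal gigabytes (10^9 bytes), which is consistent with the 800,781,250 sectors of 512 bytes it also prints. Whether a 4K reformat keeps exactly the same byte capacity is an assumption; `sector_count` is a hypothetical helper, not part of the Fusion-io tools.

```python
# 410.00 GBytes as reported by fio-status, read as decimal gigabytes (assumption).
DEVICE_BYTES = 410 * 10**9


def sector_count(device_bytes, block_size):
    """Whole sectors of block_size bytes that fit in device_bytes (remainder dropped)."""
    if block_size <= 0:
        raise ValueError("block size must be positive")
    return device_bytes // block_size


for block_size in (512, 4096):
    print(f"{block_size:>5} B sectors: {sector_count(DEVICE_BYTES, block_size):,}")
```

The 512-byte case reproduces the sector count shown by fio-status exactly; at 4096 bytes the count is eight times smaller (rounded down), while the addressable capacity stays essentially the same.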

Bus speed can be checked with a dedicated command (or with fio-status, described below):

```
/opt/fio/bin # fio-pci-check -v
Root Bridge PCIe 2000 MB/sec needed max
ioDrive 00:41.0 (2001) Firmware 0
Current control settings: 0x283e
Correctable Error Reporting: disabled
Non-Fatal Error Reporting: enabled
Fatal Error Reporting: enabled
Unsupported Request Reporting: enabled
Payload size: 256
Max read size: 512
Current status: 0x0000
Correctable Error(s): None
Non-Fatal Error(s): None
Fatal Error(s): None
Unsupported Request(s): None
link_capabilities: 0x0000f442
Maximum link speed: 5.0 Gb/s per lane
Maximum link width: 4 lanes
Slot Power limit: 25.0W (25000mw)
Current link_status: 0x00000042
Link speed: 5.0 Gb/s per lane
Link width is 4 lanes
Current link_control: 0x00000000
Not modifying link enabled state
Not forcing retrain of link
```
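The 2000 MB/sec figure follows directly from the negotiated link. A 5.0 GT/s lane is PCIe 2.0 signalling, which uses 8b/10b encoding (8 data bits for every 10 bits on the wire), so each lane carries 500 MB/s of data per direction, and 4 lanes give 2000 MB/s. The Python sketch below shows that arithmetic; it ignores packet and protocol overhead, so real throughput will be somewhat lower, and `pcie_usable_mb_per_s` is a hypothetical helper name.

```python
def pcie_usable_mb_per_s(gt_per_s, lanes, payload_bits=8, line_bits=10):
    """Raw one-direction data rate of a PCIe link in MB/s (10**6 bytes).

    PCIe 1.x/2.x use 8b/10b encoding (the defaults); PCIe 3.0 uses 128b/130b.
    TLP headers and other protocol overhead are ignored.
    """
    data_bits_per_s = gt_per_s * 1e9 * lanes * payload_bits / line_bits
    return data_bits_per_s / 8 / 1e6


# Requirement reported by fio-pci-check ("Root Bridge PCIe 2000 MB/sec needed max").
NEEDED_MB_PER_S = 2000

# The link reported above (5.0 GT/s x4), and the same slot running at PCIe 1.x speed.
for gt, lanes in [(5.0, 4), (2.5, 4)]:
    bw = pcie_usable_mb_per_s(gt, lanes)
    print(f"{gt} GT/s x{lanes}: {bw:.0f} MB/s, enough: {bw >= NEEDED_MB_PER_S}")
```

This is why the PCIe generation and slot width matter: the same card negotiating 2.5 GT/s or fewer lanes would get only a fraction of the bandwidth it asks for.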

Most information can be obtained with the fio-status command, which has several useful options to limit the output to errors and related messages (-e) or to dump all available information (-a):

```
/opt/fio/bin # fio-status -a
Found 1 ioMemory device in this system
Driver version: 3.2.6 build 1219
Adapter: ioMono
Fusion-io 410GB ioScale2, Product Number:F11-003-410G-CS-0001, SN:1345G0233, FIO SN:1345G0233
ioDrive2 Adapter Controller, PN:PA005004005
External Power: NOT connected
PCIe Bus voltage: avg 11.93V
PCIe Bus current: avg 0.69A
PCIe Bus power: avg 8.28W
PCIe Power limit threshold: 24.75W
PCIe slot available power: unavailable
Connected ioMemory modules:
fct0: Product Number:F11-003-410G-CS-0001, SN:1345G0233
fct0 Attached
ioDrive2 Adapter Controller, Product Number:F11-003-410G-CS-0001, SN:1345G0233
ioDrive2 Adapter Controller, PN:PA005004005
SMP(AVR) Versions: App Version: 1.0.13.0, Boot Version: 1.0.4.1
Powerloss protection: protected
PCI:41:00.0, Slot Number:1
Vendor:1aed, Device:2001, Sub vendor:1aed, Sub device:2001
Firmware v7.1.15, rev 110356 Public
410.00 GBytes device size
Format: v500, 800781250 sectors of 512 bytes
PCIe slot available power: 25.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 52.66 degC, max 59.55 degC
Internal voltage: avg 1.01V, max 1.02V
Aux voltage: avg 2.48V, max 2.49V
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Active media: 100.00%
Rated PBW: 2.00 PB, 100.00% remaining
Lifetime data volumes:
Physical bytes written: 45,701,644,712
Physical bytes read : 22,004,442,720
RAM usage:
Current: 153,816,384 bytes
Peak : 3,351,972,480 bytes
Contained VSUs:
fioiom0: ID:0, UUID:c29b73cc-088a-48b0-b47a-a5a435be88ed
fioiom0 State: Online, Type: block device
ID:0, UUID:c29b73cc-088a-48b0-b47a-a5a435be88ed
410.00 GBytes device size
Format: 800781250 sectors of 512 bytes
```

Block size, bus speed and storage usage can all be monitored with this command. Note the internal temperature, which is mainly due to the server's form factor: this host was a 1U R620, while on a nearby 2U R720 the reported temperature was 42.33 degC (max 47.74 degC).
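Because everything is printed as plain text, a few health values can be pulled out of a saved `fio-status -a` dump for periodic checks. The Python sketch below is only an illustration: the regular expressions are written against the sample output in this post and are an assumption about the format, the helpers `parse_fio_status`, `wear_used_pct` and `reserves_ok` are hypothetical, and "PB" is taken as 10^15 bytes. On the host you would first redirect the command output to a file and read that file instead of the embedded sample.

```python
import re

# Lines copied from the fio-status -a output above; a real script would read a saved dump.
SAMPLE = """\
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 52.66 degC, max 59.55 degC
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Rated PBW: 2.00 PB, 100.00% remaining
Physical bytes written: 45,701,644,712
Physical bytes read : 22,004,442,720
"""

# Patterns inferred from the sample output (assumption, not a documented interface).
PATTERNS = {
    "link_mb_per_s": re.compile(r"PCIe negotiated link:.*?([\d.]+) MBytes/sec total"),
    "temp_c": re.compile(r"Internal temperature:\s*([\d.]+) degC"),
    "temp_max_c": re.compile(r"Internal temperature:.*max ([\d.]+) degC"),
    "reserves_pct": re.compile(r"Reserves:\s*([\d.]+)%"),
    "reserves_warn_pct": re.compile(r"warn at\s*([\d.]+)%"),
    "rated_pbw": re.compile(r"Rated PBW:\s*([\d.]+) PB"),
    "bytes_written": re.compile(r"Physical bytes written:\s*([\d,]+)"),
}


def parse_fio_status(text):
    """Return the numeric fields that were found, as floats keyed by name."""
    values = {}
    for key, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match:
            values[key] = float(match.group(1).replace(",", ""))
    return values


def wear_used_pct(values):
    """Share of the rated endurance already written, in percent (PB = 10**15 bytes)."""
    rated_bytes = values["rated_pbw"] * 1e15
    return 100.0 * values["bytes_written"] / rated_bytes


def reserves_ok(values):
    """True while the reserve space is above the warning level fio-status prints."""
    return values["reserves_pct"] > values["reserves_warn_pct"]


if __name__ == "__main__":
    v = parse_fio_status(SAMPLE)
    print(f"link: {v['link_mb_per_s']:.0f} MB/s")
    print(f"temperature: {v['temp_c']} degC (max {v['temp_max_c']} degC)")
    print(f"endurance used: {wear_used_pct(v):.4f}%")
    print(f"reserves above warning level: {reserves_ok(v)}")
```

For this card, about 45.7 GB written against a 2 PB rating is roughly 0.002% of the endurance, which is why fio-status still shows 100.00% remaining.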

There is also the fio-update-iodrive command, used to update the firmware of the cards.

Note also that you can find a nice video on the company site, "A Brief History of NAND Flash Storage".