Reading Time: 3 minutes

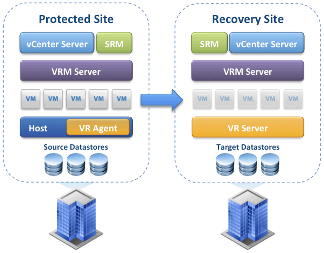

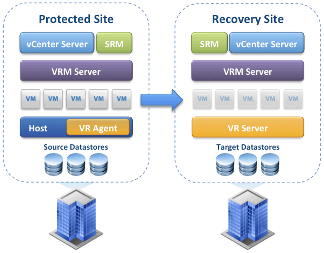

One of the interesting features of VMware SRM 5.0.x is vSphere Replication (VR), a VM replication engine that is part of SRM 5.0 and requires ESXi 5.0 or later. It protects and replicates virtual machines between sites without the need for storage array-based replication, which is usually costly and heavily vendor-dependent.

It uses several components:

- VRA (vSphere Replication agent): included in ESXi starting from v5.0

- VRMS (vSphere Replication Management Server): one virtual appliance (VA) for each site to handle the communication

- VRS (vSphere Replication Server): one virtual appliance (VA) on the DR side that is just the “target” of the VR agent

All of these virtual appliances can be deployed directly from the SRM plugin.

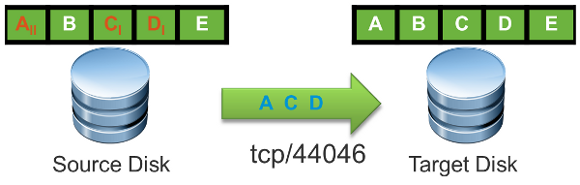

How VR determines what is different and what needs to be replicated

There are two forms of synchronization that VR will use to keep systems synchronized:

- “Full sync”: this usually happens only on the first pass, when the VM is configured for VR, but it can also happen occasionally in other situations, such as when recovering from a crash.

When VR is first configured for a virtual machine, you choose a primary disk file or set of disk files and a remote target location to hold the replica. The target can be an empty folder, or it can contain a copy of the VMDK that has the same UUID as the primary protected system. The first thing VR does when synchronizing is read the entire disk at both the protected and the recovery site and generate a checksum for each block.

Then it compares the checksum maps of the two disk files and creates the initial bundle of blocks that must be replicated on the first pass to bring the block checksums into alignment. This happens on port 31031.

- “Lightweight delta”: ongoing replication uses an agent and a vSCSI filter that reside within the kernel of an ESXi 5.0 host. The filter tracks I/O, keeps an in-memory bitmap of changed blocks, and backs it with a “persistent state file” (.psf) in the home directory of the VM. The .psf file contains only pointers to the changed blocks. When it is time to replicate the changes to the remote site, as dictated by the RPO set for the VMDK, a bundle is created with the changed blocks and sent to the remote site to be committed to disk. This replication happens on port 44046.
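To make the two synchronization modes more concrete, here is a small Python sketch of the general idea. It is not VMware's implementation: the block size, the use of SHA-1, and all names (`full_sync_bundle`, `DirtyBlockTracker`, `apply_bundle`) are assumptions chosen for illustration, and a simple in-memory set stands in for the kernel bitmap and the .psf file.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size, for illustration only


def block_checksums(disk: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Return one checksum per block (SHA-1 is an arbitrary choice here)."""
    return [
        hashlib.sha1(disk[i:i + block_size]).hexdigest()
        for i in range(0, len(disk), block_size)
    ]


def full_sync_bundle(source: bytes, target: bytes,
                     block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Full sync: compare per-block checksums and collect differing blocks."""
    src = block_checksums(source, block_size)
    dst = block_checksums(target, block_size)
    bundle = {}
    for idx, digest in enumerate(src):
        # A block is sent if the target lacks it or its checksum differs.
        if idx >= len(dst) or dst[idx] != digest:
            bundle[idx] = source[idx * block_size:(idx + 1) * block_size]
    return bundle


class DirtyBlockTracker:
    """Lightweight delta: remember which blocks were written since last send."""

    def __init__(self, block_size: int = BLOCK_SIZE):
        self.block_size = block_size
        self.dirty: set[int] = set()  # stand-in for the changed-block bitmap

    def record_write(self, offset: int, length: int) -> None:
        """Mark every block touched by a write of `length` bytes at `offset`."""
        if length <= 0:
            return
        first = offset // self.block_size
        last = (offset + length - 1) // self.block_size
        self.dirty.update(range(first, last + 1))

    def delta_bundle(self, disk: bytes) -> dict[int, bytes]:
        """Build a bundle of the changed blocks, then reset the tracker."""
        bs = self.block_size
        bundle = {idx: disk[idx * bs:(idx + 1) * bs] for idx in sorted(self.dirty)}
        self.dirty.clear()
        return bundle


def apply_bundle(target: bytearray, bundle: dict[int, bytes],
                 block_size: int = BLOCK_SIZE) -> None:
    """Commit a bundle of blocks to the replica, growing it if needed."""
    for idx, data in bundle.items():
        start = idx * block_size
        end = start + len(data)
        if end > len(target):
            target.extend(b"\0" * (end - len(target)))
        target[start:end] = data
```

In this sketch the full sync has to read and hash both disks completely, while the delta path only touches blocks that were recorded as written, which is why the ongoing replication is so much cheaper. The sketch does not handle a replica that is larger than the source.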

For more information see also: